I simply wanted to check how reliable it is to use the HTML5 video tag to receive live streams. The main issue with live (real-time) streams is that you want them to be as close to real time as possible. Such a feature would enable HTML5 web-based applications for both video conferencing and remote desktop.

Cool, right? Well, not so fast! Video in browsers is rather complicated, mainly because each browser supports a different set of container formats and audio/video codecs. Have a look at the HTML5 video format support table on Wikipedia to understand what I'm talking about.

For this example I'm going to focus on three browsers: Google Chrome, Firefox and Opera Next (mainly because WebGL will be used). Unfortunately, Apple's Safari does not support WebGL on Windows. Still, when it comes to live streaming, I'm confident that Safari would be the best option, since Apple explicitly supports streaming on iOS. Internet Explorer, at the time of writing, does not support WebGL at all.

WebGL is a recent web standard that brings hardware-accelerated graphics to the browser, and it is now supported by all the browsers mentioned above. In some cases you will have to enable it explicitly, since it is still an experimental feature:

Google Chrome – Make sure your browser is updated to the latest version and, if you are using Windows XP, you might need to add the “--ignore-gpu-blacklist” option to the Chrome command line.

Firefox – I used Firefox 7 and 10 (Nightly build). In either, WebGL can be enabled by typing about:config in the address bar and filtering the properties by webgl, then setting webgl.force-enabled and webgl.prefer-native-gl to true.

You can check if you have WebGL working by visiting Google Chrome Experiments on WebGL.
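If you'd rather check from your own page, a minimal sketch like the following tries the standard "webgl" context name first and then the older "experimental-webgl" name used by the browsers above. The helper name getWebGLContext is hypothetical; in the browser you would pass it a real canvas element.

```javascript
// Hypothetical helper: returns { gl, contextName } or null when WebGL is unavailable.
// `canvas` is anything with a getContext(name) method, e.g. an HTMLCanvasElement.
function getWebGLContext(canvas) {
  var names = ["webgl", "experimental-webgl"]; // standard name first, then the prefixed one
  for (var i = 0; i < names.length; i++) {
    try {
      var gl = canvas.getContext(names[i]);
      if (gl) {
        return { gl: gl, contextName: names[i] };
      }
    } catch (e) {
      // Some older browsers throw instead of returning null; try the next name.
    }
  }
  return null; // disabled, blacklisted GPU, or no WebGL support at all
}

// In the browser:
// var result = getWebGLContext(document.createElement("canvas"));
// alert(result ? "WebGL available via " + result.contextName : "WebGL not available");
```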

Step 1 – Select the Streaming Codec

There's an ongoing war over which codec should be the one for the web. Currently only H.264 is hardware accelerated but, unfortunately, Opera and Firefox don't support it since it is not a royalty-free format. Chrome does support it, but Google has announced it will drop it. Instead, Google is now promoting the WebM (VP8/Vorbis) format, which is still quite new and only supported by recent browser versions. Fortunately, all of them support the Ogg/Theora/Vorbis format.

So, for the sake of this experiment, it makes sense to go with Ogg as the container, Theora as the video codec and Vorbis as the audio codec (not that I really care about sound here). I'll also explain how to stream using WebM for Chrome.
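Since support differs per browser, a page can also ask the browser which of the two streams in this post it can play, using HTMLMediaElement.canPlayType(), which answers "probably", "maybe" or an empty string. A minimal sketch follows; pickStream and CANDIDATE_STREAMS are hypothetical names, and the paths are the Apache-proxied stream URLs used later in this post.

```javascript
// Hypothetical candidate list: WebM (for Chrome) first, Ogg/Theora as the common fallback.
var CANDIDATE_STREAMS = [
  { type: 'video/webm; codecs="vp8, vorbis"', src: "/desktop/stream/first" },
  { type: 'video/ogg; codecs="theora, vorbis"', src: "/desktop/stream.ogg" }
];

// Returns the first candidate answered "probably", else the first "maybe",
// else null. `canPlayType` is a function(typeString) -> "", "maybe" or "probably".
function pickStream(canPlayType, candidates) {
  var fallback = null;
  for (var i = 0; i < candidates.length; i++) {
    var answer = canPlayType(candidates[i].type);
    if (answer === "probably") {
      return candidates[i]; // best possible answer, stop looking
    }
    if (answer === "maybe" && fallback === null) {
      fallback = candidates[i]; // remember the first "maybe"
    }
  }
  return fallback;
}

// In the browser:
// var video = document.getElementById("video");
// var chosen = pickStream(function (t) { return video.canPlayType(t); }, CANDIDATE_STREAMS);
// if (chosen) { video.src = chosen.src; }
```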

Step 2 – Create a simple HTML5 video page

videoTest.html

```html
<!DOCTYPE html>
<html>
<head>
  <title>Video Test</title>
  <meta http-equiv="content-type" content="text/html; charset=ISO-8859-1">
  <META HTTP-EQUIV="Pragma" CONTENT="no-cache">
</head>
<body>
  <video id="video" src="http://oggtv.com/AVATAR/ad.ogg" autoplay="autoplay">
    Your browser doesn't appear to support the HTML5 <code>&lt;video&gt;</code> element.
  </video>
</body>
</html>
```

If you open the above HTML file in, say, Google Chrome and wait a few seconds (depending on network speed), you should see an Avatar video playing.

Step 3 – Create an Ogg/Theora live stream of your desktop

For this I used VideoLAN's VLC and started it with the following command line:

```
"C:\Program Files\VideoLAN\VLC\vlc.exe" -I dummy screen:// :screen-fps=16.000000 :screen-caching=100 :sout=#transcode{vcodec=theo,vb=800,scale=1,width=600,height=480,acodec=vorb}:http{mux=ogg,dst=127.0.0.1:8081/desktop.ogg} :no-sout-rtp-sap :no-sout-standard-sap :ttl=1 :sout-keep
```

The above command line instructs VLC to capture the desktop at 16 fps, encode it as Ogg/Theora and stream it through an embedded HTTP server on port 8081. You can lower the FPS number if VLC is hogging too much CPU.
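Since the one-liner is hard to read, here is a minimal sketch that assembles the same arguments from named parameters, which makes the sout chain (#transcode{...} feeding http{...}) easier to tweak. buildVlcArgs is a hypothetical helper; it uses acodec=vorb, VLC's name for Vorbis, in line with the codec choice of Step 1.

```javascript
// Hypothetical helper: builds the VLC argument list for the desktop stream.
// screen:// captures video only, so the audio codec setting is effectively unused.
function buildVlcArgs(opts) {
  var transcode = "#transcode{vcodec=theo,vb=" + opts.videoKbps +
      ",scale=1,width=" + opts.width + ",height=" + opts.height + ",acodec=vorb}";
  var http = "http{mux=ogg,dst=" + opts.host + ":" + opts.port + "/" + opts.path + "}";
  return [
    "-I", "dummy",                          // run without a user interface
    "screen://",                            // capture the desktop
    ":screen-fps=" + opts.fps.toFixed(6),   // capture rate, e.g. 16.000000
    ":screen-caching=100",
    ":sout=" + transcode + ":" + http,      // encode with Theora, then serve over HTTP
    ":no-sout-rtp-sap", ":no-sout-standard-sap", ":ttl=1", ":sout-keep"
  ];
}

var args = buildVlcArgs({ fps: 16, videoKbps: 800, width: 600, height: 480,
                          host: "127.0.0.1", port: 8081, path: "desktop.ogg" });

// With Node.js, the array can be passed to VLC without any shell quoting:
// require("child_process").spawn("C:\\Program Files\\VideoLAN\\VLC\\vlc.exe", args);
```

Passing an argument array rather than one string avoids having to escape the braces and quotes in the sout chain.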

Now edit the videoTest.html file and replace the line:

```html
<video id="video" src="http://oggtv.com/AVATAR/ad.ogg" autoplay="autoplay">
```

with one containing the streaming URL:

```html
<video id="video" src="http://localhost:8081/desktop.ogg" autoplay="autoplay">
```

Most browsers will auto-detect the stream format, but you can always help them by declaring it. Note that the type attribute belongs on a source element, not on the video tag itself:

```html
<video id="video" autoplay="autoplay">
  <source src="http://localhost:8081/desktop.ogg" type='video/ogg; codecs="theora"'>
</video>
```

To test it, I recommend opening the file with either Firefox or Opera Next. To make it work we will have to serve the HTML file from a web server; I'll use Apache.

Step 4 – Install and Configure Apache Web Server

Installation: If you don't have it yet, download it and follow the instructions on the Apache HTTP Server web page.

Deploy web page: Once installed, find the APACHE_HOME/htdocs directory, create a sub-directory called desktop and copy the videoTest.html file into it.

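Configure Reverse Proxy: We need this to get around a WebGL security restriction: frames from a video element can only be uploaded as a texture if the video comes from the same origin (scheme, host and port) as the page, unless the server allows it via CORS. Edit APACHE_HOME/conf/httpd.conf and add the following lines at the bottom: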

```apache
# Mod_proxy Module
ProxyReceiveBufferSize 16384
# Reverse proxy only; "ProxyRequests On" would turn Apache into an open forward proxy
ProxyRequests Off
ProxyVia On
ProxyPreserveHost On

<Proxy *>
  Order deny,allow
  Allow from all
</Proxy>

# VLC server stream
ProxyPass /desktop/stream.ogg http://localhost:8081/desktop.ogg
ProxyPassReverse /desktop/stream.ogg http://localhost:8081/desktop.ogg
```

You'll also need to uncomment the following lines near the top of httpd.conf:

```apache
LoadModule proxy_module modules/mod_proxy.so
LoadModule proxy_balancer_module modules/mod_proxy_balancer.so
LoadModule proxy_connect_module modules/mod_proxy_connect.so
LoadModule proxy_http_module modules/mod_proxy_http.so
```

Start or restart the Apache web server and point a browser (Firefox or Opera) to http://localhost/desktop/videoTest.html.
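To see why the reverse proxy is needed, compare origins: a page and a media URL share an origin only when scheme, host and port all match. A minimal sketch using the standard URL class (available in browsers and Node.js); sameOrigin is a hypothetical helper name.

```javascript
// Hypothetical helper: true when mediaUrl (absolute, or relative to pageUrl)
// has the same origin (scheme + host + port) as pageUrl.
function sameOrigin(pageUrl, mediaUrl) {
  return new URL(pageUrl).origin === new URL(mediaUrl, pageUrl).origin;
}

var page = "http://localhost/desktop/streamer.html";

// VLC's own server listens on port 8081, so this is a different origin and
// WebGL will not accept the video frames as a texture (unless CORS allows it).
console.log(sameOrigin(page, "http://localhost:8081/desktop.ogg")); // false

// The reverse-proxied path is served by Apache on port 80, like the page itself.
console.log(sameOrigin(page, "/desktop/stream.ogg")); // true
```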

You will notice that it can become quite slow, since Ogg/Theora is not hardware accelerated. On top of that, the browsers buffer the video and there's no way to tell them not to.
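To put a number on that buffering, you can compare how far the browser has downloaded with what it is currently showing. A minimal sketch using the standard HTMLMediaElement buffered (a TimeRanges object) and currentTime properties; bufferedAheadSeconds is a hypothetical helper name, and it only measures the client-side buffer, not encoding or network delay.

```javascript
// Hypothetical helper: seconds of downloaded media sitting ahead of the
// playback position. `video` needs `buffered` (length, end(i)) and `currentTime`.
function bufferedAheadSeconds(video) {
  var ranges = video.buffered;
  if (!ranges || ranges.length === 0) {
    return 0; // nothing downloaded yet
  }
  var bufferedEnd = ranges.end(ranges.length - 1); // end of the last buffered range
  return Math.max(0, bufferedEnd - video.currentTime);
}

// In the browser, log it once per second while the stream plays:
// setInterval(function () {
//   var v = document.getElementById("video");
//   console.log("buffered ahead: " + bufferedAheadSeconds(v).toFixed(1) + " s");
// }, 1000);
```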

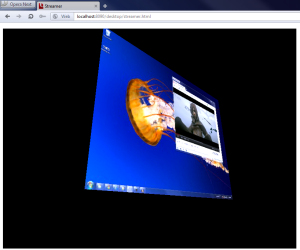

Step 5 – Make it look cool with WebGL

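This is a simple JavaScript application that uses the WebGL API exposed by the browser's canvas element: on every tick it copies the current frame of the video element into a texture and draws it on a plane that you can rotate and zoom. For vector and matrix manipulation I'm using the minified version of the glMatrix JavaScript library (0.9.5).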
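The lighting in the vertex shader of streamer.html is a plain ambient plus directional (Lambert) model. A minimal JavaScript transcription, with the constants copied from that shader (vertexLighting and dot3 are hypothetical names), shows what brightness the plane gets:

```javascript
// Constants copied from the vertex shader in streamer.html.
var AMBIENT = [0.6, 0.6, 0.6];
var LIGHT_COLOR = [0.5, 0.5, 0.75];
var LIGHT_DIRECTION = [0.85, 0.8, 0.75]; // not normalized, exactly as in the shader

function dot3(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// `normal` is the already-transformed surface normal (x, y, z).
// Returns the RGB factor the fragment shader multiplies each texel by.
function vertexLighting(normal) {
  var directional = Math.max(dot3(normal, LIGHT_DIRECTION), 0.0);
  return [
    AMBIENT[0] + LIGHT_COLOR[0] * directional,
    AMBIENT[1] + LIGHT_COLOR[1] * directional,
    AMBIENT[2] + LIGHT_COLOR[2] * directional
  ];
}
```

For the plane's normal (0, 0, 1) this gives roughly (0.975, 0.975, 1.16), so the video is drawn with a slight blue tint; once the plane is rotated so that its normal points away from the light, only the 0.6 ambient term remains and the video dims.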

streamer.html

```html
<!DOCTYPE html>
<html>
<head>
<title>Streamer</title>
<meta http-equiv="content-type" content="text/html; charset=ISO-8859-1">
<META HTTP-EQUIV="Pragma" CONTENT="no-cache">
<link rel="stylesheet" href="assets/webgl.css" type="text/css">
<script type="text/javascript" src="libs/glMatrix-0.9.5.min.js"></script>
<script type="text/javascript" src="libs/webgl-utils.js"></script> <!-- for requestAnimFrame method -->

<script id="shader-fs" type="x-shader/x-fragment">
  varying highp vec2 vTextureCoord;
  varying highp vec3 vLighting;
  uniform sampler2D uSampler;

  void main(void) {
    highp vec4 texelColor = texture2D(uSampler, vec2(vTextureCoord.s, vTextureCoord.t));
    gl_FragColor = vec4(texelColor.rgb * vLighting, texelColor.a);
  }
</script>

<script id="shader-vs" type="x-shader/x-vertex">
  attribute highp vec3 aVertexNormal;
  attribute highp vec3 aVertexPosition;
  attribute highp vec2 aTextureCoord;
  uniform highp mat4 uNormalMatrix;
  uniform highp mat4 uMVMatrix;
  uniform highp mat4 uPMatrix;
  varying highp vec2 vTextureCoord;
  varying highp vec3 vLighting;

  void main(void) {
    gl_Position = uPMatrix * uMVMatrix * vec4(aVertexPosition, 1.0);
    vTextureCoord = aTextureCoord;

    // Apply lighting effect
    highp vec3 ambientLight = vec3(0.6, 0.6, 0.6);
    highp vec3 directionalLightColor = vec3(0.5, 0.5, 0.75);
    highp vec3 directionalVector = vec3(0.85, 0.8, 0.75);
    highp vec4 transformedNormal = uNormalMatrix * vec4(aVertexNormal, 1.0);
    highp float directional = max(dot(transformedNormal.xyz, directionalVector), 0.0);
    vLighting = ambientLight + (directionalLightColor * directional);
  }
</script>

<script type="text/javascript">
  var gl;
  var canvas;       // Canvas element
  var videoElement; // Video element
  var videoTexture; // Video texture

  // GL Buffers - Object also holds items and item length
  var planeVertexPositionBuffer;     // Surface def points
  var planeVertexTextureCoordBuffer; // Texture coords
  var planeVerticesNormalBuffer;     // Lighting normals
  var planeVertexIndexBuffer;        // Drawing indexes

  var shaderProgram; // Shader program - the object also holds the attribute locations

  // Matrices
  var mvMatrix = mat4.create();
  var pMatrix = mat4.create();
  var normalMatrix = mat4.create();

  var xRot = 0;
  var xSpeed = 0;
  var yRot = 0;
  var ySpeed = 0;
  var z = -5.0;

  var currentlyPressedKeys = {};

  function initWebGL(canvas) {
    try {
      // Prefer the standard context name, fall back to the prefixed one
      gl = canvas.getContext("webgl") || canvas.getContext("experimental-webgl");
      gl.viewportWidth = canvas.width;
      gl.viewportHeight = canvas.height;
    } catch (e) {
    }
    if (!gl) {
      alert("Could not initialise WebGL, sorry :-(");
    }
  }

  function getShader(gl, id) {
    var shaderScript = document.getElementById(id);
    if (!shaderScript) {
      return null;
    }

    // Concatenate the text nodes of the <script> element
    var str = "";
    var k = shaderScript.firstChild;
    while (k) {
      if (k.nodeType == 3) {
        str += k.textContent;
      }
      k = k.nextSibling;
    }

    var shader;
    if (shaderScript.type == "x-shader/x-fragment") {
      shader = gl.createShader(gl.FRAGMENT_SHADER);
    } else if (shaderScript.type == "x-shader/x-vertex") {
      shader = gl.createShader(gl.VERTEX_SHADER);
    } else {
      return null;
    }

    gl.shaderSource(shader, str);
    gl.compileShader(shader);
    if (!gl.getShaderParameter(shader, gl.COMPILE_STATUS)) {
      alert(gl.getShaderInfoLog(shader));
      return null;
    }
    return shader;
  }

  function initShaders() {
    var fragmentShader = getShader(gl, "shader-fs");
    var vertexShader = getShader(gl, "shader-vs");

    shaderProgram = gl.createProgram();
    gl.attachShader(shaderProgram, vertexShader);
    gl.attachShader(shaderProgram, fragmentShader);
    gl.linkProgram(shaderProgram);
    if (!gl.getProgramParameter(shaderProgram, gl.LINK_STATUS)) {
      alert("Could not initialise shaders");
    }
    gl.useProgram(shaderProgram);

    shaderProgram.vertexPositionAttribute = gl.getAttribLocation(shaderProgram, "aVertexPosition");
    gl.enableVertexAttribArray(shaderProgram.vertexPositionAttribute);
    shaderProgram.vertexNormalAttribute = gl.getAttribLocation(shaderProgram, "aVertexNormal");
    gl.enableVertexAttribArray(shaderProgram.vertexNormalAttribute);
    shaderProgram.textureCoordAttribute = gl.getAttribLocation(shaderProgram, "aTextureCoord");
    gl.enableVertexAttribArray(shaderProgram.textureCoordAttribute);
  }

  function degToRad(degrees) {
    return degrees * Math.PI / 180;
  }

  function handleKeyDown(event) {
    currentlyPressedKeys[event.keyCode] = true;
  }

  function handleKeyUp(event) {
    currentlyPressedKeys[event.keyCode] = false;
  }

  function handleKeys() {
    if (currentlyPressedKeys[33]) { z -= 0.05; }   // Page Up
    if (currentlyPressedKeys[34]) { z += 0.05; }   // Page Down
    if (currentlyPressedKeys[37]) { ySpeed -= 1; } // Left cursor key
    if (currentlyPressedKeys[39]) { ySpeed += 1; } // Right cursor key
    if (currentlyPressedKeys[38]) { xSpeed -= 1; } // Up cursor key
    if (currentlyPressedKeys[40]) { xSpeed += 1; } // Down cursor key
  }

  function initBuffers() {
    // Vertex positions of a single 4 x 3 plane
    planeVertexPositionBuffer = gl.createBuffer();
    gl.bindBuffer(gl.ARRAY_BUFFER, planeVertexPositionBuffer);
    var vertices = [
      // Front face
      -2.0, -1.5, 1.0,
       2.0, -1.5, 1.0,
       2.0,  1.5, 1.0,
      -2.0,  1.5, 1.0
    ];
    gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(vertices), gl.STATIC_DRAW);
    planeVertexPositionBuffer.itemSize = 3;
    planeVertexPositionBuffer.numItems = 4;

    // Normals (all facing the viewer) used for lighting
    planeVerticesNormalBuffer = gl.createBuffer();
    gl.bindBuffer(gl.ARRAY_BUFFER, planeVerticesNormalBuffer);
    var vertexNormals = [
      // Front face normals
      0.0, 0.0, 1.0,
      0.0, 0.0, 1.0,
      0.0, 0.0, 1.0,
      0.0, 0.0, 1.0
    ];
    gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(vertexNormals), gl.STATIC_DRAW);
    planeVerticesNormalBuffer.itemSize = 3;
    planeVerticesNormalBuffer.numItems = 4;

    // Texture coordinates: the whole video frame covers the plane
    planeVertexTextureCoordBuffer = gl.createBuffer();
    gl.bindBuffer(gl.ARRAY_BUFFER, planeVertexTextureCoordBuffer);
    var textureCoords = [
      // Front face
      0.0, 0.0,
      1.0, 0.0,
      1.0, 1.0,
      0.0, 1.0
    ];
    gl.bufferData(gl.ARRAY_BUFFER, new Float32Array(textureCoords), gl.STATIC_DRAW);
    planeVertexTextureCoordBuffer.itemSize = 2;
    planeVertexTextureCoordBuffer.numItems = 4;

    // Two triangles make up the plane
    planeVertexIndexBuffer = gl.createBuffer();
    gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, planeVertexIndexBuffer);
    var planeVertexIndices = [
      0, 1, 2, 0, 2, 3 // Front face
    ];
    gl.bufferData(gl.ELEMENT_ARRAY_BUFFER, new Uint16Array(planeVertexIndices), gl.STATIC_DRAW);
    planeVertexIndexBuffer.itemSize = 1;
    planeVertexIndexBuffer.numItems = 6;
  }

  function initTexture() {
    // Start with a static snapshot until video frames are available
    videoTexture = gl.createTexture();
    videoTexture.image = new Image();
    videoTexture.image.onload = function() {
      handleLoadedTexture(videoTexture);
    };
    videoTexture.image.src = "media/snapshot.png";
  }

  function handleLoadedTexture(texture) {
    try {
      gl.pixelStorei(gl.UNPACK_FLIP_Y_WEBGL, true);
      gl.bindTexture(gl.TEXTURE_2D, texture);
      gl.texImage2D(gl.TEXTURE_2D, 0, gl.RGBA, gl.RGBA, gl.UNSIGNED_BYTE, texture.image);
      // CLAMP_TO_EDGE without mipmaps allows non-power-of-two textures such as video frames
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_S, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_WRAP_T, gl.CLAMP_TO_EDGE);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MAG_FILTER, gl.NEAREST);
      gl.texParameteri(gl.TEXTURE_2D, gl.TEXTURE_MIN_FILTER, gl.NEAREST);
      gl.bindTexture(gl.TEXTURE_2D, null);
    } catch (e) {
      var log = document.getElementById("logger");
      log.innerHTML = log.innerHTML + '<BR>' + e.message;
    }
  }

  // Copy the current video frame into the texture
  function insertVideo(video) {
    videoTexture.image = video;
    handleLoadedTexture(videoTexture);
  }

  function drawScene() {
    gl.clear(gl.COLOR_BUFFER_BIT | gl.DEPTH_BUFFER_BIT);
    gl.viewport(0, 0, gl.viewportWidth, gl.viewportHeight);

    mat4.perspective(60, gl.viewportWidth / gl.viewportHeight, 0.1, 100.0, pMatrix);
    mat4.identity(mvMatrix);
    mat4.translate(mvMatrix, [0.0, 0.0, z]);
    mat4.rotate(mvMatrix, degToRad(xRot), [1, 0, 0]);
    mat4.rotate(mvMatrix, degToRad(yRot), [0, 1, 0]);

    gl.bindBuffer(gl.ARRAY_BUFFER, planeVertexPositionBuffer);
    gl.vertexAttribPointer(shaderProgram.vertexPositionAttribute, planeVertexPositionBuffer.itemSize, gl.FLOAT, false, 0, 0);
    gl.bindBuffer(gl.ARRAY_BUFFER, planeVertexTextureCoordBuffer);
    gl.vertexAttribPointer(shaderProgram.textureCoordAttribute, planeVertexTextureCoordBuffer.itemSize, gl.FLOAT, false, 0, 0);
    gl.bindBuffer(gl.ARRAY_BUFFER, planeVerticesNormalBuffer);
    gl.vertexAttribPointer(shaderProgram.vertexNormalAttribute, planeVerticesNormalBuffer.itemSize, gl.FLOAT, false, 0, 0);

    gl.activeTexture(gl.TEXTURE0);
    gl.bindTexture(gl.TEXTURE_2D, videoTexture);
    gl.uniform1i(gl.getUniformLocation(shaderProgram, "uSampler"), 0);

    gl.bindBuffer(gl.ELEMENT_ARRAY_BUFFER, planeVertexIndexBuffer);
    setMatrixUniforms();
    gl.drawElements(gl.TRIANGLES, planeVertexIndexBuffer.numItems, gl.UNSIGNED_SHORT, 0);
  }

  var lastTime = 0;

  // Advance the rotation angles according to the elapsed time
  function animate() {
    var timeNow = new Date().getTime();
    if (lastTime != 0) {
      var elapsed = timeNow - lastTime;
      xRot += (xSpeed * elapsed) / 1000.0;
      yRot += (ySpeed * elapsed) / 1000.0;
    }
    lastTime = timeNow;
  }

  function tick() {
    insertVideo(document.querySelector("video"));
    handleKeys();
    drawScene();
    animate();
  }

  function setMatrixUniforms() {
    shaderProgram.pMatrixUniform = gl.getUniformLocation(shaderProgram, "uPMatrix");
    gl.uniformMatrix4fv(shaderProgram.pMatrixUniform, false, pMatrix);
    shaderProgram.mvMatrixUniform = gl.getUniformLocation(shaderProgram, "uMVMatrix");
    gl.uniformMatrix4fv(shaderProgram.mvMatrixUniform, false, mvMatrix);

    // Normal matrix = transpose(inverse(modelview))
    mat4.inverse(mvMatrix, normalMatrix);
    mat4.transpose(normalMatrix, normalMatrix);
    shaderProgram.normalMatrixUniform = gl.getUniformLocation(shaderProgram, "uNormalMatrix");
    gl.uniformMatrix4fv(shaderProgram.normalMatrixUniform, false, normalMatrix);
  }

  function webGLStart() {
    canvas = document.getElementById("streamer-canvas");
    videoElement = document.getElementById("video");
    initWebGL(canvas);
    if (!gl) {
      return; // initWebGL has already reported the problem
    }
    gl.clearColor(0.0, 0.0, 0.0, 1.0);
    gl.clearDepth(1.0);       // Clear everything
    gl.enable(gl.DEPTH_TEST); // Enable depth testing
    gl.depthFunc(gl.LEQUAL);  // Near things obscure far things
    initShaders();
    initBuffers();
    initTexture();
    document.onkeydown = handleKeyDown;
    document.onkeyup = handleKeyUp;
    setInterval(tick, 15);
  }
</script>
</head>

<body onload="webGLStart();">
<p id="logger"></p>
<canvas id="streamer-canvas" style="border: none;" width="800" height="600"></canvas>
<!-- Served by Apache through the reverse proxy, i.e. same origin as this page -->
<video id="video" src="http://localhost/desktop/stream.ogg" autoplay="autoplay">
  Your browser doesn't appear to support the HTML5 <code>&lt;video&gt;</code> element.
</video>
<h2>Controls:</h2>
<ul>
  <li><code>Page Up</code>/<code>Page Down</code> to zoom out/in
  <li>Cursor keys: make the plane rotate (the longer you hold down a cursor key, the more it accelerates)
</ul>
</body>
</html>
```
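The tick() function above runs every 15 ms (about 66 times per second), well above the 16 fps the VLC stream produces, so insertVideo() often re-uploads the same frame, and before the first frame arrives there is nothing to upload at all. A minimal sketch of a guard, assuming only the standard readyState and currentTime properties of HTMLMediaElement; makeFrameGate is a hypothetical helper name.

```javascript
var HAVE_CURRENT_DATA = 2; // same value as HTMLMediaElement.HAVE_CURRENT_DATA

// Hypothetical helper: returns a function that says whether the video's
// current frame is new and worth copying into the WebGL texture.
function makeFrameGate() {
  var lastUploadedTime = -1;
  return function shouldUpload(video) {
    if (video.readyState < HAVE_CURRENT_DATA) {
      return false; // no decoded frame available yet
    }
    if (video.currentTime === lastUploadedTime) {
      return false; // same frame as the last upload: skip the copy
    }
    lastUploadedTime = video.currentTime;
    return true;
  };
}

// In streamer.html, tick() could then become:
// var shouldUpload = makeFrameGate();
// function tick() {
//   var video = document.querySelector("video");
//   if (shouldUpload(video)) { insertVideo(video); }
//   handleKeys(); drawScene(); animate();
// }
```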

Step 6 – Live WebM Streaming

For WebM streaming you'll need the following tools:

Screen Capture – A simple screen capture filter for DirectShow (a Windows installer is available). For it to work you might also need the DirectX SDK and the Visual C++ 2010 Redistributable Package (x86) installed.

FFmpeg – Static/binary build.

Stream-M – A small tool to publish live media streams. It is a Java application, so you'll need Java installed; I used JDK 6.

To install FFmpeg and Stream-M, just unzip them into a directory of your choice.

Next, start the Stream-M server: cd into the directory where you unpacked Stream-M and run the following command:

```
java.exe -cp lib/stream-m.jar StreamingServer server.conf
```

Then start publishing your desktop using FFmpeg (the stream name, password and port in the publish URL must match the stream settings in Stream-M's server.conf):

```
ffmpeg.exe -f dshow -i video="screen-capture-recorder" -r 1 -g 2 -vcodec libvpx -vb 1024k -f matroska http://localhost:8080/publish/first?password=secret
```
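The -r 1 -g 2 options trade smoothness for a short keyframe interval: -r sets the output frame rate and -g the maximum number of frames between keyframes. A viewer that connects mid-stream generally cannot show a picture until the next keyframe arrives, so the interval is worth working out. A trivial sketch (keyframeIntervalSeconds is a hypothetical name):

```javascript
// Seconds between keyframes for a given GOP size (-g) and frame rate (-r).
function keyframeIntervalSeconds(gopFrames, framesPerSecond) {
  return gopFrames / framesPerSecond;
}

console.log(keyframeIntervalSeconds(2, 1));  // 2     (the settings above)
console.log(keyframeIntervalSeconds(2, 16)); // 0.125 (same GOP at 16 fps)
```

Raising -r makes the desktop feed smoother; keeping -g small keeps the join delay short, at the cost of bitrate, since keyframes are much larger than the frames in between.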

The next step is to edit httpd.conf to support this new stream source by adding another reverse proxy rule at the bottom of the file:

```apache
ProxyPass /desktop/stream http://localhost:8080/consume
ProxyPassReverse /desktop/stream http://localhost:8080/consume
```
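Apache now has two reverse-proxy rules under /desktop, and it checks ProxyPass rules in configuration order, using the first match. A simplified model of that mapping follows; it is not Apache's actual code, mapProxyPath and PROXY_RULES are hypothetical names, and it assumes a prefix only matches whole path segments (check the mod_proxy documentation for the exact rules).

```javascript
// Hypothetical rule table mirroring the two ProxyPass lines, in config order.
var PROXY_RULES = [
  { prefix: "/desktop/stream.ogg", backend: "http://localhost:8081/desktop.ogg" }, // VLC (Ogg)
  { prefix: "/desktop/stream", backend: "http://localhost:8080/consume" }          // Stream-M (WebM)
];

// True when `prefix` matches `path` on a path-segment boundary (an assumption
// of this sketch): "/desktop/stream" matches "/desktop/stream/first" but not
// "/desktop/streamer.html".
function prefixMatches(path, prefix) {
  if (path.indexOf(prefix) !== 0) {
    return false;
  }
  var next = path.charAt(prefix.length); // "" when path === prefix
  return next === "" || next === "/" || prefix.charAt(prefix.length - 1) === "/";
}

// Returns the backend URL for a request path, or null when Apache should
// serve the file itself from htdocs. The first matching rule wins.
function mapProxyPath(path, rules) {
  for (var i = 0; i < rules.length; i++) {
    if (prefixMatches(path, rules[i].prefix)) {
      return rules[i].backend + path.slice(rules[i].prefix.length);
    }
  }
  return null;
}

// mapProxyPath("/desktop/stream/first", PROXY_RULES)
//   -> "http://localhost:8080/consume/first"
```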

Make sure you restart the Apache web server.

Finally, change the video tag in streamer.html to:

```html
<video id="video" src="http://localhost/desktop/stream/first" autoplay="autoplay">
```

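Copy streamer.html, together with the libs, assets and media folders it references, into APACHE_HOME/htdocs/desktop. Then start Google Chrome and point it to http://localhost/desktop/streamer.html.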

Conclusion

Even though the HTML5 video spec leaves room for streams, my conclusion so far is that HTML5 video is not nearly ready for live streaming. In my experiments the video tag would always buffer, I could not find a way to disable that, and this ends up causing a lag of at least 5 to 8 seconds.

For now, when it comes to live streaming, Flash and RTMP/RTMTP-based solutions are still the way to go.

Download the source code here. Make sure you rename the file to .7z and extract it using the 7-Zip tool.

In Firefox I ran the Avatar video code, but the later example does not work. Can you help me? I ran the VLC desktop capture command successfully, as given:

“C:\Program Files (x86)\VideoLAN\VLC\vlc.exe” -I dummy screen://:screen-fps=16.000000 :screen-caching=100:sout=#transcode{vcodec=theo,vb=800,scale=1,width=600,height=480,acodec=mp3} :http{mux=ogg,dst=127.0.0.1:8081/desktop.ogg}

Then I configured Apache as required but the code of videoTest.html does not show the captured video from VLC.

Abdullah,

I’m so sorry for the delayed response. Just upgraded to a new laptop and I haven’t had the time to check your issue.

Hopefully, you’ve already figured the issue out by now.

It turns out there was a typo in the provided sample. Basically, the settings were asking VLC to stream to “localhost:8081/desktop.ogg” while the HTML5 sample was looking for it at “localhost:80/desktop/stream.ogg”. They both need to be the same URL.

I've tested with the latest versions of VLC, Chrome and Firefox and can confirm that it works just fine.

Good luck

Great Post!!!!

Done, Thank you man

Hey! How do I capture my webcam?

Read notes in Step 6.

You can also try VLC, FFmpeg, Flash Media Live Encoder 3.2 (free version) or other streaming tools like the Sarxos Webcam Capture library (http://webcam-capture.sarxos.pl).

I’m having trouble getting this to work in IE, anyone have a solution?

When this was first tested, IE did not yet support WebGL; that's why I ignored it entirely. IE 11 supports WebGL, but I have yet to find the time and opportunity to play with it.